Automatic speech recognition technology has improved so much in the last couple of years that it can now create better captions than humans.

Trained on large language models, the artificial intelligence (AI) engines used to generate closed captioning for broadcast content are now reaching 98% accuracy, with about three seconds of latency for live captioning. Beyond creating transcripts of content, these products also offer translation capabilities, and captioning vendors are automating operations to streamline the workflow.
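The article does not say how the 98% figure is measured. One common convention in speech recognition is to report word accuracy as one minus the word error rate (WER), where WER counts the word substitutions, deletions and insertions needed to turn the machine transcript into a reference transcript, divided by the number of reference words. The sketch below is a minimal illustration under that assumption; the function names are hypothetical and are not taken from any vendor's product.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein dynamic program).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from captions
            insertion = curr[j - 1] + 1  # extra word added by the captions
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed convention: accuracy = 1 - WER (can go negative with many insertions)."""
    return 1.0 - word_error_rate(reference, hypothesis)
```

Under this convention, 98% accuracy corresponds to roughly one wrong, missing or extra word in every 50 words of reference transcript.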

These changes couldn’t come fast enough, as a trio of factors stack up to challenge live captioning. Fewer people are becoming qualified stenographers, broadcasters are creating vastly more content that must be captioned, and regulations are expected soon that will require captioning on all broadcast video content, no matter how it is distributed.

“Live captioning has been in need of some serious disruption for a while, and a lot of it is workflow-related,” Chris Antunes, 3Play Media co-founder and co-CEO, says. Stenographers are highly skilled but an “aging demographic,” he adds.

On the other hand, he notes, automatic speech recognition capabilities are improving all the time, so much so that 3Play publishes a State of Automatic Speech Recognition report each year and uses the top performer as the base for its services.

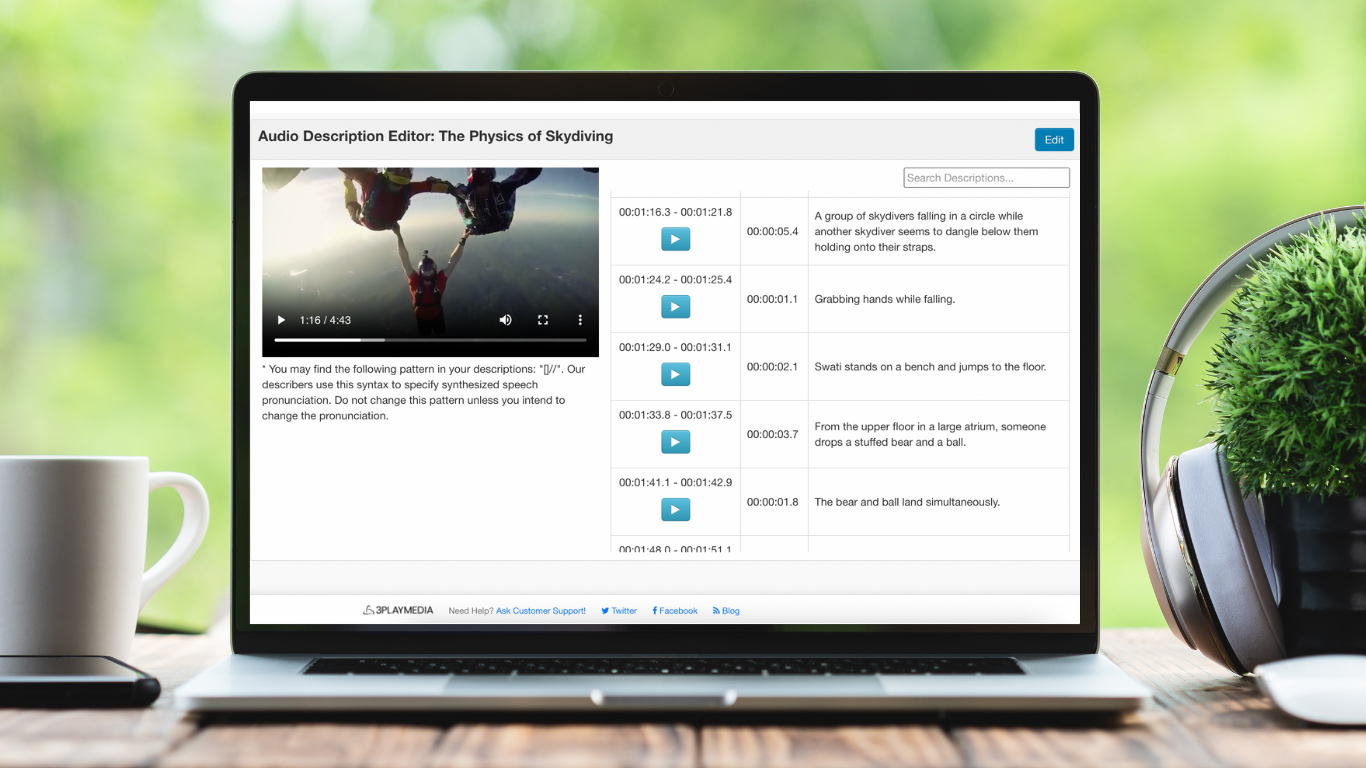

3Play Media’s Automatic Description Editor

More content than ever before is being created, and much of that content is not currently covered by captioning requirements. Regulators are poised to modernize captioning requirements, however, so